Can You Trust That AI Vendor? Use the CSA to Find Out

I’ve been around the block a few times and have sat on both sides of the table when it comes to evaluating software vendors. One topic that is guaranteed to get both sides hot under the collar is addressing security concerns. With so many AI startups launching every day, knowing who you can trust can be a daunting task.

As a purchaser, you should expect the service provider you choose to look after your data as if it were your newborn child. In this article we’ll explore how to develop the trust and confidence that your vendor is going to be a responsible babysitter and not invite her boyfriend round with a crate of beer.

Purchasing Power

As someone who has spent the last 15 years developing software for enterprise customers, my first point is that large enterprises have a huge amount of leverage in any deal with a small startup or scale-up. I’m not advocating using that imbalance of power to negotiate price or demand unreasonable terms, but I do firmly believe it should be used to obtain clarity and evidence that the vendor takes security seriously. That way you can formalise an engagement with a clear understanding of the risks you are facing - and there will always be some.

The longest security review I remember took about six months, and it was a long, hard slog. We went through meetings and questionnaires, provided reports and screenshots of settings as evidence, shared architectural details, and even made changes to the product to address specific concerns. Ultimately it was worthwhile: our relationship started on a solid foundation of trust, the product was better for it, our customer was happy that they knew exactly what they were getting into, and we were happy to have a great new customer, one that remained a customer for many years.

What to care about?

The main concerns that I would have are:

- How likely is it that my company data will be misappropriated and/or lost?
- How robust and resilient is the service against attacks?
- Where is the data located?
- How reliable is the service going to be for all the ways that we want to use it?
- What happens if the service suffers an incident?

For me, it’s about making sure that we get continuity of service and that the data is protected to a level appropriate to the type of data.

There will obviously be concerns specific to each organisation, but from a security point of view I wouldn’t be concerned about things like whether the RDBMS is open-source or closed-source, whether the infrastructure is on GCP, AWS or Azure, or how much memory is allocated to serverless functions. Whilst these issues may indirectly affect performance and reliability, managing them should be the vendor’s responsibility, without interference.

CSA as a place to start

If you’re considering a cloud-based vendor where the service is provided to you (rather than something implemented in your own corporate cloud), a good place to start is to see whether the vendor has a listing in the CSA (Cloud Security Alliance) STAR Registry. At the time of writing, the following AI services had listings:

Now, the observant among you will notice that the vendors that do have entries have generally been around for a while. In fact, to be honest, the list was so sparse that I had to add some vendors that simply have AI in their offering. Even OpenAI only made its submission last year, so it seems the AI sector as a whole has been slow to get involved.

Having been through the submission process twice before at Cloudapps, I can say that it takes time and thought to prepare a submission, but with VCs throwing money at anything that promises AI, it’s disappointing not to see more vendors on the registry.

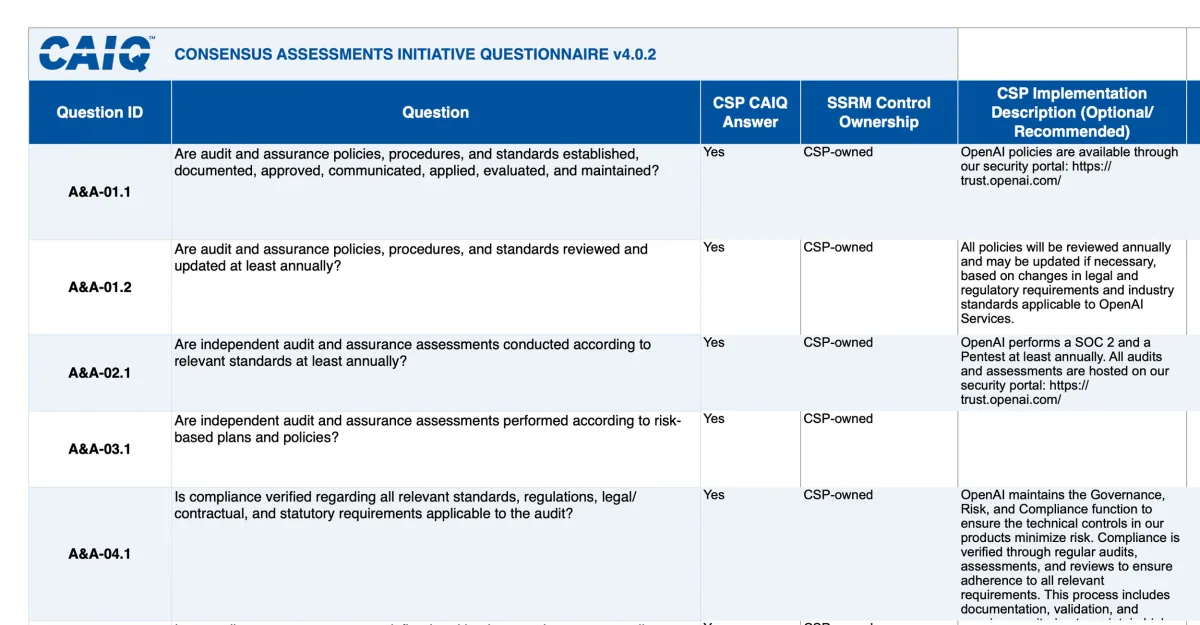

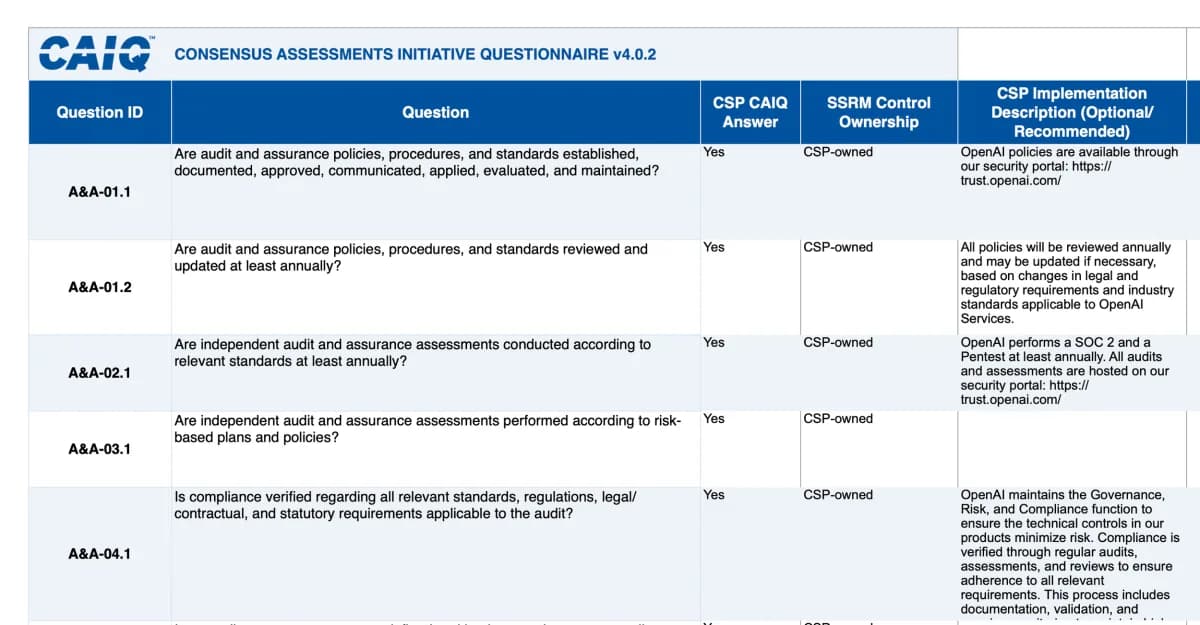

The idea is fairly straightforward. You (the vendor) answer around 250 questions about the working practices, policies and procedures behind the development and delivery of your service. Once you have answered them, you submit your responses to the registry, which is publicly accessible, so anyone (yes, anyone) can see them.

Submissions may be self-assessed (STAR Level 1) or audited by a third party (STAR Level 2).

If your vendor has made a submission, great: sit back with a cup of coffee and review their responses. It’s unrealistic to expect a vendor to “tick” every single box, but the responses should give you a sense of how professional the vendor is.

Snippet of OpenAI’s CSA submission

Tailor the Framework

If I were considering purchasing from a vendor and wanted to evaluate their security posture, I would start by taking the CAIQ (Consensus Assessments Initiative Questionnaire) framework and cherry-picking the questions that I cared about to produce a tailored master list. This would become the security scorecard against which every vendor is measured.

If the vendor has made a CSA submission, you already have their answers to those questions. For certain critical questions you may still decide to require evidence that backs up the claims in the submission, for example penetration test reports. This is particularly prudent if the submission has not been independently audited or was made several years ago.
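To make the scorecard idea a little more concrete, here is a minimal Python sketch of how a tailored master list could be scored. It is an illustration under stated assumptions, not a CSA tool: the question IDs, wording and weights are hypothetical placeholders (not real CAIQ identifiers), answers are assumed to be recorded as "Yes", "No" or "NA", and `score_vendor` is a hypothetical helper. A "Yes" on a question flagged as needing evidence only earns credit once that evidence (for example a penetration test report) has been provided.

```python
from dataclasses import dataclass


# Hypothetical tailored question: IDs and wording are placeholders,
# not actual CAIQ question identifiers.
@dataclass(frozen=True)
class Question:
    qid: str
    text: str
    weight: int                      # how much this question matters to you
    evidence_required: bool = False  # e.g. ask for a pen test report


@dataclass
class Response:
    answer: str                      # assumed to be "Yes", "No" or "NA"
    evidence_provided: bool = False


def score_vendor(questions, responses):
    """Return (percentage score, question IDs to raise with the vendor)."""
    earned = 0
    possible = 0
    follow_up = []
    for q in questions:
        r = responses.get(q.qid)
        if r is None:
            # Unanswered: counts against the vendor and needs a follow-up.
            possible += q.weight
            follow_up.append(q.qid)
            continue
        if r.answer == "NA":
            # Not applicable: excluded from the total rather than penalised.
            continue
        possible += q.weight
        if r.answer == "Yes" and (r.evidence_provided or not q.evidence_required):
            earned += q.weight
        else:
            # A "No", or a "Yes" still missing the evidence we asked for.
            follow_up.append(q.qid)
    pct = 100.0 * earned / possible if possible else 0.0
    return round(pct, 1), follow_up


scorecard = [
    Question("DATA-LOC", "Is customer data stored only in agreed regions?", 3),
    Question("PEN-TEST", "Is the service penetration tested annually?", 5,
             evidence_required=True),
    Question("INC-RESP", "Is there a documented incident response plan?", 4),
]

vendor_a = {
    "DATA-LOC": Response("Yes"),
    "PEN-TEST": Response("Yes", evidence_provided=False),
    "INC-RESP": Response("Yes"),
}

print(score_vendor(scorecard, vendor_a))  # → (58.3, ['PEN-TEST'])
```

Scoring as a percentage of the applicable weight lets you compare vendors on the same scorecard even when some questions don’t apply to them, and the follow-up list gives you a ready-made agenda for any conversation with the vendor.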

Engage with your vendors

If the vendor has not made a submission to the CSA, send them your tailored questions in the same format. In all cases, request any supporting evidence you need. Vendors should expect this level of scrutiny from enterprise organisations. Once the responses are collected, you can review them.

If the responses aren’t clear, a call with the vendor’s product team may be necessary, and I think this is a good litmus test of how serious they are. If you identify a weakness in their security profile, you should expect the vendor to take that feedback seriously and act to resolve it. This sort of response makes working with small, resource-limited startups worthwhile and can lay the foundations of a successful partnership in which the enterprise customer has influence over the direction of the product roadmap, making it a win-win.

Conclusion

Working with any new vendor carries risk, no matter how well established or how big they are. From a security point of view, that shouldn’t matter. Vendors should expect you to ask pointed questions about the technical architecture of their solution; if they don’t, treat that as a red flag. Using a framework like the one from the CSA gives structure to your security evaluation process, allowing you to compare vendors fairly on criteria that are important to your organisation. Done right, you’ll find the vendors who are willing to go the extra mile, and that’s something worth having.

If your team is struggling to evaluate AI vendors or you want a sanity check on your existing shortlist, we can help you build a review process that doesn’t waste time or let risk slip through. Drop us a line.