research

12 articles in this category.

Building the Future: How AI is Architecting the Next Generation of Construction

We are on the verge of a transformation so profound it will redefine every phase of a project's lifecycle. This revolution is powered by Artificial Intelligence (AI), and its blueprint is the fully connected, intelligent construction site.

Learning to Walk with AI - Automating Workflows

Walking is all about automating business workflows with a sprinkling of AI to help move things along. There are things that we do within our professional lives that are repeatable and predictable: recording contact details from business cards, recording monthly expenses, following up on missed phone calls, issuing invoices, and onboarding clients or employees.

Getting Started with AI in Sales for Small Businesses

In my previous post, we examined how small businesses can begin using AI in three stages: Crawling, Walking, and Running. In this article, we’ll focus on starting with crawling, particularly in Sales and Marketing, as that is where I have spent most of my professional life. Crawling is about enhancing personal productivity and gaining a clearer understanding of what AI can achieve within your organisation. We’ll explore AI assistants and several purpose-built tools that can be easily and affordably used for many sales-related tasks.

Small Business AI Playbook

If you sometimes feel like a rabbit in the headlights when it comes to AI, you’re not alone. I know that feeling well. It’s exactly how I felt eight years ago when I was first tasked with figuring out whether AI could help the business I was working in. Small businesses like yours are in a unique position. We can use AI to become more efficient, more competitive, and grow faster. In this post, we’ll explore how to get started.

Understanding Embeddings

The rise of vector databases has defined a whole new sector in technology. Vendors such as Pinecone, Qdrant, Weaviate and ChromaDB have appeared on the landscape seemingly out of nowhere, and now it seems like every other post on your favourite social media channel treats them as a critical part of technology infrastructure. What’s the thing that underpins this technology and makes it all possible? Embeddings. In this article we’ll explore where they came from, what they are and why they’re so valuable when it comes to creating personalised AI solutions.

Do things that don’t scale

Paul Graham, a co-founder of Y Combinator, is famous for telling startups to “do things that don’t scale”. I share this philosophy when it comes to new software projects. That’s not to say that I don’t care about scaling. I do, but in the overall set of priorities, scaling concerns don’t even make it into the top three. In this post I’ll share the formula that I use when starting new projects and how I arrange my development priorities so that I can get a product to market in the shortest time possible and continue to improve and develop it as its usage gains traction.

How to Deconstruct Your Moodle Soup

A client once described their data situation to me as a "Data Soup", and it was the perfect description. It's a jumble of valuable feedback from course participants, scattered across hundreds of individual files, mixed with inconsistent questions, and buried so deep in the system that it's almost impossible to use. It’s a common problem for organisations that rely on Moodle’s powerful course-delivery features but find its data-export capabilities lacking. In this article, I'll walk you through how we deconstructed that soup. We'll cover how we extracted the good stuff, cleaned it up, and turned it into a practical AI analytics tool that can answer complex questions in plain English.

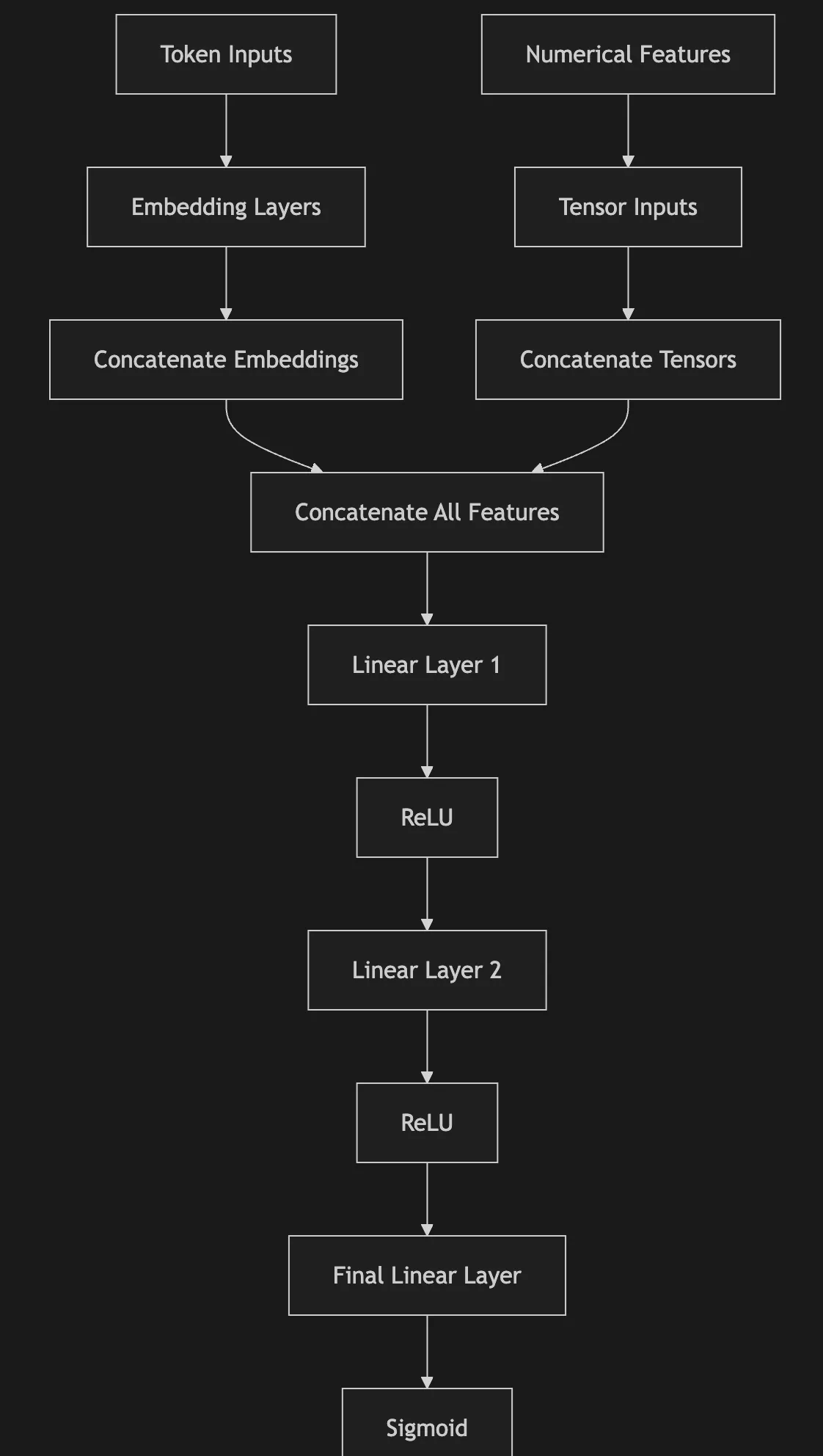

Let’s build a Salesforce Deal Predictor (Part 2)

We demonstrate how to create an AI system that can predict the outcomes of Salesforce opportunities using a custom model built with PyTorch from the ground up.

Let’s build a Salesforce Deal Predictor (Part 1)

Salesforce has been the darling of the CRM world for as long as I can remember. Businesses of all shapes and sizes use it to manage their sales processes. For some time, their Einstein Opportunity Scoring product has been predicting outcomes of deals, but anecdotally the clients I have spoken to have been underwhelmed by its performance. So we’re going to put that right in this article and explore an alternative way of generating predictions on opportunities … the point is not so much to give you an assignment to create your own solution, but rather to give you a sense of how the solution is a combination of data and AI and what’s important in any custom model AI project that you embark on.

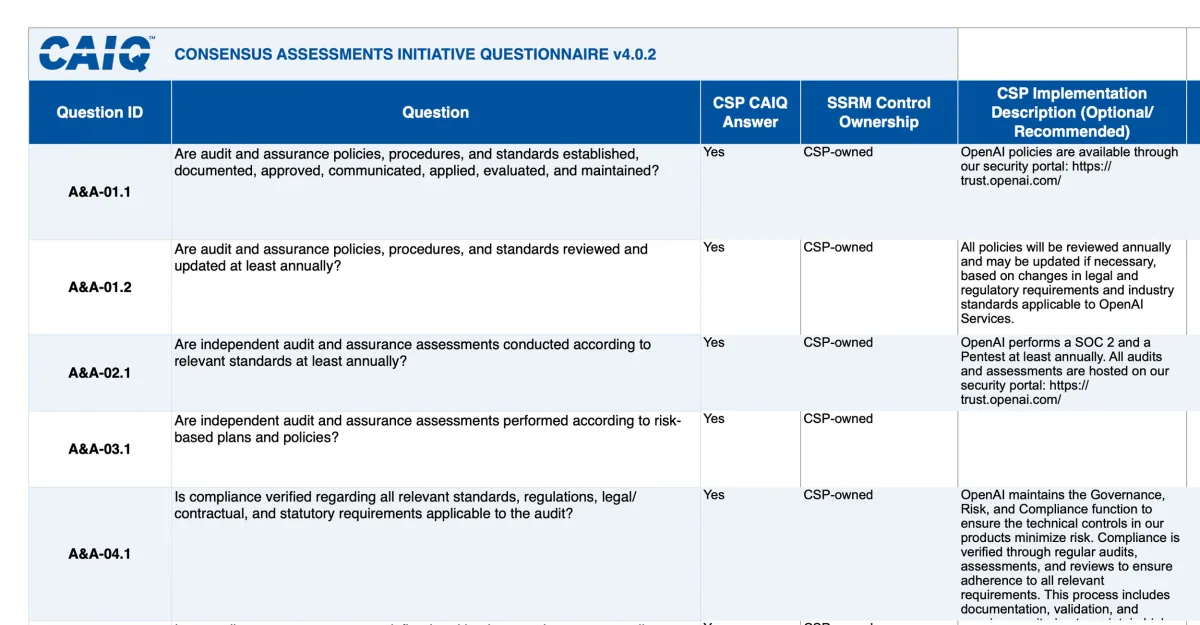

Can You Trust That AI Vendor? Use the CSA to find out

I’ve been around the block a few times and sat on both sides of the table when it comes to evaluating software vendors. One topic that is guaranteed to get both sides hot under the collar is security. With so many AI startups getting established every day, it can be a daunting task to know who you can trust.

How to choose the right AI solution

There’s a lot of pressure right now to do something with AI, but picking the wrong solution can be costly, distracting, and damaging. Here's the framework I’ve used over 20 years to separate hype from substance and evaluate solutions on the strengths of the benefits to the business.

How AI Actually Works

You need a product ICP to minimise churn

Every now and then, you talk to someone who really gets it. Someone who not only uses your product, but also sees its potential and can articulate meaningful ideas for where it should go next. When that happens, it’s like finding gold. You leave the call energised, full of ideas, and importantly with a clear sense of direction.

How I make and deliver a roadmap

A roadmap that is delivered on time and meets your customers’ expectations means you can expect a reduction in churn, an increase in referrals and more leads, ultimately leading to lower CAC (Customer Acquisition Cost) and higher LTV (Lifetime Value). In this post I’ll describe the framework that I have developed for my own roadmap planning and delivery.

How to make your CRM successful

Believe it or not, I have been involved with Salesforce for the last 20 years: first as a customer, then as an SI partner, and for the last 15 years as an ISV. During that time I’ve seen plenty of implementations, and a lot of them have been pretty ugly. I don’t want yours to be one of them, so I’ve got a list of 10 recommendations that I would like you to consider to help maximise your investment in Salesforce.

How SaaS will die

For 20 years I’ve been working with SaaS systems, most notably Salesforce. A few weeks ago I started seeing posts on LinkedIn claiming that SaaS will die. At the time I dismissed it as clickbait, but I think there might be something in it, and I can see a future where the traditional SaaS model is obsolete. In this post we’ll explore how this might play out and what you can do to adapt to a new SaaS-free world.

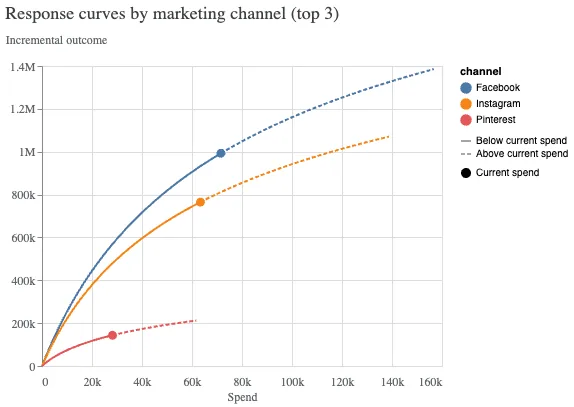

How to optimise your marketing spend with Meridian

Google's new Marketing Mix Model, Meridian, offers a fresh approach to understanding and optimising marketing investments. In my latest article, I explore how Meridian leverages Bayesian inference to provide actionable insights into channel performance, ROI, and budget allocation. While it requires some technical setup, the potential benefits for strategic decision-making are substantial. If you're interested in data-driven strategies to enhance your marketing effectiveness, this piece provides a practical overview.

Why Your Sales Forecasting Fails: The Critical Role of Time in CRM Data and Machine Learning

Most sales teams are sitting on a goldmine of data, but their machine learning models are digging in the wrong place. In this article, we reveal why sales forecasting often falls short, the hidden flaw in CRM data that’s undermining your insights, and how to fix it.

Introduction to Lead Scoring

Lead scoring is a vital strategy within Conversion Rate Optimisation (CRO), enabling businesses to assess the potential of leads converting into customers. By attributing scores based on key factors such as demographic data, behavioural patterns, and engagement levels, businesses can prioritise leads most likely to provide a substantial return on investment.

Deep Dive of Google’s TSMixer

A deep dive into Google’s TSMixer paper

Building an AI SaaS product on a shoestring budget with AWS Serverless (Part 3)

Part 3 of our series on building an AI SaaS product on a shoestring budget.

Introduction to Marketing Mix Modelling (MMM)

Marketing Mix Modelling (MMM) is a sophisticated statistical analysis method that allows marketers to evaluate the effectiveness of various marketing strategies and their direct impact on overall business performance.

Are OEM Partnerships worth it?

If you’re an early-stage SaaS startup thinking about entering into an OEM agreement, then I have some thoughts from personal experience that I hope will give you some food for thought. It goes back a few years, to when the SaaS business I was involved with was still very much in start-up mode and everything we did was about growth. One day I was in a management meeting and we were discussing the fact that we had been approached by another SaaS vendor, operating in a different market, who was interested in using part of our product and adding it to their own portfolio under an OEM arrangement. It sounded like too good an opportunity to turn down, but read on, because not everything turned out rosy.

Are Your Sales Metrics Deceiving You?

Deal Cycle Time and Win Rates are critical metrics that help explain the cost of customer acquisition. When they are measured on false assumptions, they will inhibit efforts to make the best decisions for the business. I discuss how this can happen, what the potential consequences are and how to avoid it.

Building an AI SaaS product on a shoestring budget with AWS Serverless (Part 2)

Part 2 of our series on building an AI SaaS product on AWS Serverless.

How to make better decisions with AI simulations

Have you ever wondered what your world would be like if you could just wind back the clock, make a different decision about something you weren’t happy with and see how things played out? Wouldn’t it be wonderful if we could try out different scenarios before committing to them? Well, I have good news, my friends, because this is an area where machine learning can really come to your aid. In this article we’ll take a look at what you can do and why it’s so useful.

Win more with eXplainable AI

AI has helped us to extract more meaningful insights from sales data, enabling us to improve the processes that drive more sales. When eXplainable AI (XAI) is added to the mix, you’ll find that suddenly you’re able to answer questions like “Why will I win this deal?” rather than “Am I going to win this deal?”.

9 Pitfalls to avoid when predicting Sales Opportunities

In this post, we’ll explore some of the common challenges that can derail your efforts to predict sales opportunities accurately. Drawing from experience, I’ll highlight potential solutions to avoid these pitfalls and build a more reliable system.

Our Top 7 Forecasting Models We Benchmarked For Monash

Top 7 Forecasting Models We Benchmarked For Monash

Building an AI SaaS product on a shoestring budget with AWS Serverless (Part 1)

In this post, someone who’s been there and done it shows you what you need to consider when building an enterprise-class AI SaaS product.

RevIN: What really grinds my gears

A review of RevIN (Reversible Instance Normalization), used in time series forecasting

NeurIPS Ariel Challenge: What's it like to take part in a Kaggle competition?

An opinion piece on participating in a Kaggle competition

Multivariate Time Series models: Do we really need them?

A comparison of local, global, univariate and multivariate configurations using the DLinear and NLinear models

LTSF-Linear: Embarrassingly simple time series forecasting models

A review of the 2022 paper "Are Transformers Effective for Time Series Forecasting?" that introduced the DLinear and NLinear models

PyTorch vs MXNet: Which is faster?

An analysis of the computational efficiency of PyTorch and MXNet

The F1 Score: Time Series Model Championships

A ranking system for time series models based on the Monash dataset benchmarks, using the MASE metric and the Formula 1 scoring system.

GenAI: I don't care what it is and you shouldn't either

A look at the history of AI and how the terms AI, Machine Learning, Deep Learning and Generative AI have evolved over time.

1Cycle scheduling: State of the art timeseries forecasting

How to get state of the art timeseries forecasting results using machine learning with my variant of DeepAR and 1Cycle Scheduling

Super-convergence: Supercharge your Neural Networks

A look at 1Cycle scheduling, one of my favourite techniques for improving model performance, and practical guidance on how to use it

A fistful of MASE: Deconstructing DeepAR

A deep dive into the GluonTS DeepAR neural network model architecture for time series forecasting and an ablation study of the covariates.

Predicting covariates: Is it a good idea?

A study which evaluates the effectiveness of predicting covariates in LSTM Neural Networks for Time Series Forecasting

Message from Gareth

A bit about founder Gareth Davies and why he created Neural Aspect.

Teacher Forcing: A look at what it is and the alternatives

Review of teacher forcing, free running, scheduled sampling, professor forcing and attention forcing for training autoregressive neural networks

Human-level control through deep reinforcement learning

Recreating the experiments from the classic 2015 DeepMind paper by Mnih et al.: Human-level control through deep reinforcement learning

Revisiting Playing Atari with Deep Reinforcement Learning

Recreating the experiments from the classic DQN DeepMind paper by Mnih et al.: Playing Atari with Deep Reinforcement Learning

A discussion about the future of AI in 2024

From the Cloudapps Winning with AI podcast on YouTube: A discussion with Joshua Harris about the future of AI in 2024

A discussion about the history of AI

From the Cloudapps Winning with AI podcast on YouTube: A discussion with Joshua Harris about the history of AI